
Individualized Emails Revisited: Putting the “Wider Net” Question to the Test

Two years ago, when Mark and Michael prepared to give a presentation at the Association for Institutional Research annual conference in Denver, they came across Zoe Cohen’s article in EvoLLLution titled “Small Changes, Large Rewards: How Individualized Emails Increase Classroom Performance.” Their topic was a novel Bayesian approach for determining gateway course grade cutoffs, and they were intrigued by Zoe’s success with email interventions, especially her suggestion that “perhaps, by throwing the net wider, I would have seen a greater increase in overall scores.” This “wider net” principle was consistent with one of their core messages that effective course grade cutoffs in gateway courses could be higher than the DFW levels typically practiced across the country. They referred to Zoe’s work in their presentation, but their curiosity remained piqued afterwards. They had to try this when they got back to campus.

They subsequently approached me about trying Zoe’s approach in my Elementary Statistics course, MATH 1703. This course has one of the highest fail rates of any course on campus, so the possibility that Zoe’s email technique could help more students pass was certainly appealing. But MATH 1703 is also a prerequisite for high-stakes second-admit programs, most notably nursing, where simply passing the class isn’t normally sufficient. Students who earn less than an A or B are at a severe disadvantage for getting into those programs. This reality made the wider net question one worth asking. I agreed to give it a shot in my Spring 2020 and Fall 2020 classes.

Like Zoe, I sent personalized emails to students who failed the first exam, but not to all of them. To better isolate the intervention’s effect, the emails were sent to only half of the students who failed, while the other half served as a control group. This same approach was applied for emails sent to students who earned Ds, Cs and Bs on the first exam to put Zoe’s wider net question to the test.
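For readers who want to replicate the design, here is a minimal sketch of the split, not the exact procedure we followed. It assumes a standard 10-point letter scale (F below 60, D from 60 to 69, and so on) and assumes the halves are chosen at random within each grade level; the function names `letter_grade` and `assign_groups` are hypothetical.

```python
import random
from collections import defaultdict


def letter_grade(score):
    """Map a 0-100 exam score to a letter grade (assumed 10-point scale)."""
    if score >= 90:
        return "A"
    if score >= 80:
        return "B"
    if score >= 70:
        return "C"
    if score >= 60:
        return "D"
    return "F"


def assign_groups(scores, seed=0):
    """Split students into treatment and control halves within each grade.

    scores: dict mapping a student identifier to a first-exam score.
    Students with an A are left out, as they were in the experiment.
    Random assignment is an assumption of this sketch.
    """
    rng = random.Random(seed)
    by_grade = defaultdict(list)
    for student, score in scores.items():
        grade = letter_grade(score)
        if grade != "A":
            by_grade[grade].append(student)

    assignment = {}
    for students in by_grade.values():
        students = sorted(students)  # fixed order so a seed is reproducible
        rng.shuffle(students)
        half = len(students) // 2
        for index, student in enumerate(students):
            # With an odd count, the extra student goes to control.
            assignment[student] = "treatment" if index < half else "control"
    return assignment
```

Splitting within each grade level, rather than across the whole class, keeps the treatment and control groups comparable at every starting point.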

We used the same basic message and tone as Zoe, adjusting the questions we asked to appropriately align with my course. We also added a “stretch factoid” that informed students how others with higher exam scores had performed on the homework for that unit. For instance, the email sent to students who scored an F contained the homework average for those who scored a C, while the message to D-scorers included the homework average for B-scorers, and so forth. This set a tangible and achievable goal for each student and established improving scores on homework as a good place to start for improving exam scores.

The response I received from students was very similar to what Zoe reported. Comments ranged from “Thank you so much for reaching out, it really means a lot” to “It’s nice to see professors who actually care.” The answers students provided to the questions posed gave me enough information to start guiding them on a personalized path to success, that is, offering them ways to improve based on what they’d been doing. The simple email opened up a line of communication, and students came to me more often throughout the semester, both in person and via email. This was especially helpful in the all-online sections or when the pandemic required moving in-person classes online. Sending a simple, individualized email let the students know I was available and willing, even in difficult times, to help them be more successful. But did it help in the end?

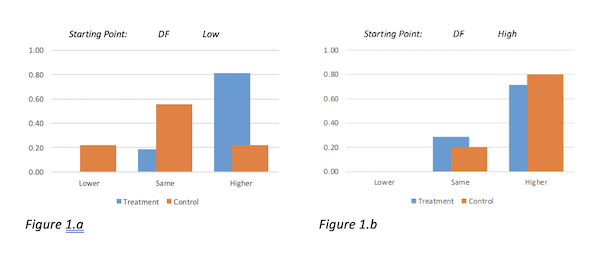

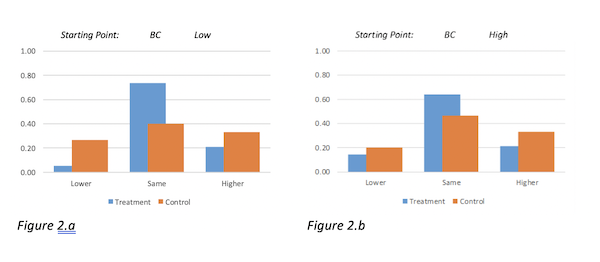

The subsequent data analysis was informed by a few observations about the nature of grades. First, we knew the F category was fundamentally different from other grades because of the much wider range of qualifying scores (0-59 points). The other grade levels had a narrower range of qualifying scores, so a student was never more than 10 points away from the next grade up. Second, for all grade levels except F, a student could end up with a lower final grade than what they got on the first exam. While the intent of the intervention was to help students improve their final grades, this reality opened up the possibility that a successful intervention could be one that helped keep students from getting a lower final grade. Third, we recognized that not all same-level grades are created equal. Was it fair to compare the improvement characteristics of a student who earned an opening 62 D versus a student with an opening 68 D? We defined a “low grade” as one being 6 to 10 points away from the next grade up and a “high grade” as one being only 1 to 5 points away from the next grade up. For the special case of F, we added a “very low” category for students more than 10 points away from a D.
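The starting-point categories can be written out as a short sketch. It assumes whole-number exam scores and grade cutoffs at 60, 70, 80 and 90; the function names `next_grade_cutoff` and `starting_band` are hypothetical labels for illustration.

```python
def next_grade_cutoff(score):
    """Return the lowest score that earns the next grade up, or None for an A."""
    for cutoff in (60, 70, 80, 90):  # assumed cutoffs for D, C, B and A
        if score < cutoff:
            return cutoff
    return None


def starting_band(score):
    """Classify a first-exam score as 'high', 'low' or 'very low'.

    'high'     : 1 to 5 points below the next grade up
    'low'      : 6 to 10 points below the next grade up
    'very low' : more than 10 points below (only possible for an F)
    Returns None for an A, which has no next grade up.
    """
    cutoff = next_grade_cutoff(score)
    if cutoff is None:
        return None
    gap = cutoff - score
    if gap <= 5:
        return "high"
    if gap <= 10:
        return "low"
    return "very low"
```

Under these assumptions, `starting_band(62)` gives `"low"` and `starting_band(68)` gives `"high"`, which matches the two D students in the example above.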

What we found was that the intervention worked really well for students with “low grade” starting points, especially low Ds and low Fs. Among these students, 80% of those in the treatment group ended the class with a higher grade, compared to only 21% of those in the control group, a statistically significant result (Figure 1.a). Similar significant results were found for “very low” F starters. By contrast, the treatment didn’t work at all for students with a high D or high F starting point. It’s not that the treatment group students didn’t improve their grades. They did, but so did students in the control group, and to a similar extent (Figure 1.b). High-grade students may have felt they were close enough to get to the next level on their own. But for the low-end starters, the intervention provided the resources, encouragement and belief they needed to overcome the more daunting difference.
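To show how a comparison like 80% versus 21% can be checked for significance, here is a minimal sketch of a one-sided Fisher’s exact test. This article does not report which test was used or the group sizes, so both the choice of test and the counts in the usage note are assumptions made purely for illustration; `fisher_exact_one_sided` is a hypothetical helper built on the standard library.

```python
from math import comb


def fisher_exact_one_sided(treat_up, treat_not, ctrl_up, ctrl_not):
    """One-sided Fisher's exact test on a 2x2 table.

    Returns the probability, if the emails made no difference, of seeing
    at least `treat_up` improvers in the treatment group given the group
    sizes and the total number of improvers.
    """
    n_treat = treat_up + treat_not
    n_ctrl = ctrl_up + ctrl_not
    total_up = treat_up + ctrl_up
    denominator = comb(n_treat + n_ctrl, total_up)
    most_extreme = min(n_treat, total_up)
    numerator = sum(
        comb(n_treat, x) * comb(n_ctrl, total_up - x)
        for x in range(treat_up, most_extreme + 1)
    )
    return numerator / denominator
```

With hypothetical counts of 8 improvers out of 10 treated students and 3 out of 14 controls (roughly 80% and 21%, but not the study’s actual numbers), `fisher_exact_one_sided(8, 2, 3, 11)` falls below the conventional 0.05 threshold. An exact test is a reasonable choice here because groups within a single course are small.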

The intervention worked for low B and C students too, but differently. Far fewer students in either the treatment or control groups improved their grades, but the intervention seemed to help low B and C starters maintain their positions. Over 70% of low B/C starters in the treatment group ended with the same grade compared to only 40% of the control group, a statistically significant difference (Figure 2.a). For the high B/C starters, the pattern was similar, but the differences were smaller and not statistically significant (Figure 2.b). This unexpected finding suggests that even students who earned an A on the first exam might have benefited from an intervention. While A students were not included in this experiment, 36% of first-exam A students finished the class with a lower grade. An intervention cautioning against overconfidence and encouraging continued effort might have helped these students maintain their position, especially students aspiring to high-stakes second-admit programs, where a high grade is more critical.

Returning to Zoe’s original wider net question, it does appear that a greater increase in overall scores is possible, given sufficient resources. During our two-semester experiment, significant improvement was observed among both initial F and D students, but only for students with low and very low starting points. The surprise finding that low B and low C students were more likely to maintain their grade positions in response to the intervention caused us to rethink the possible outcomes and applications of the email technique.

In a broader context, these findings provide a good example of what Kristen Bilodeau described in her recent EvoLLLution interview as “personalizing the student experience” to bring it more in line with students’ motivations and goals. The individualized email technique begins with the intent of personalizing the experience, and seems to do a good job of it, but our experimental findings suggest that further personalization is possible. If the ideal student experience, according to Bilodeau, is “getting them what they want when they want it,” then recognizing up front that student motivations and goals may vary within a class can affect the way outreach is tailored to best fit their needs and wants.

In my class, directing an improvement message to low F and D students would likely be well received, but a grade maintenance message might be a more appropriate and effective approach for helping low C, B, and even A students succeed. Initial high-grade earners may be sufficiently self-motivated to reach their goals on their own.


Author Perspective: Administrator

Author Perspective: Educator