
Validating Competencies and Skills Gained Through Rigorous Assessment

At a competency-based university, assessment is the backbone of everything we do. Quality, industry-verified tests allow us to confidently classify an individual as competent and prepared for workforce entry. Western Governors University (WGU) differs from the rest of academia in that assessments are central to how we validate skills and competencies. The traditional credit hour means little at WGU. Instead, students demonstrate mastery through high-quality assessments: objective tests, papers, simulations, clinicals or presentations. To best meet the needs of WGU’s nearly 120,000 students and the nation’s employers, the university must operate like a well-oiled machine. What follows is a glimpse into how WGU’s rigorous process for objective assessments ensures value to students and the employers who hire WGU graduates.

Purpose

Our work begins by specifying the test purpose. This involves answering two questions: How will the scores be used? And what are the associated stakes? Each of our exams is used to classify students as either competent or not-yet competent with regard to the skills necessary to enter a particular profession. We do not attempt to accurately measure students along the entire range of achievement or ability. Our focus is on a single decision point. Students earn or fail to earn course credit based on their performance, so there are important consequences or stakes. These factors inform all subsequent decisions about test design, such as test length, difficulty of test questions, testing conditions and score reports.

Linking Skills to Professions

Next, we perform what is broadly referred to as a job task analysis. This is how we ensure that the skills we measure matter on the job. Typically, surveys are conducted asking professionals to rate various skills in terms of how important they are to the job and how frequently they are used on it. We have created a unique process whereby all underlying skills are connected across all courses and degree programs. As a result, this activity does not occur each time a test is developed; instead, the results of this massive mapping are referenced.

Specifying What’s On The Test

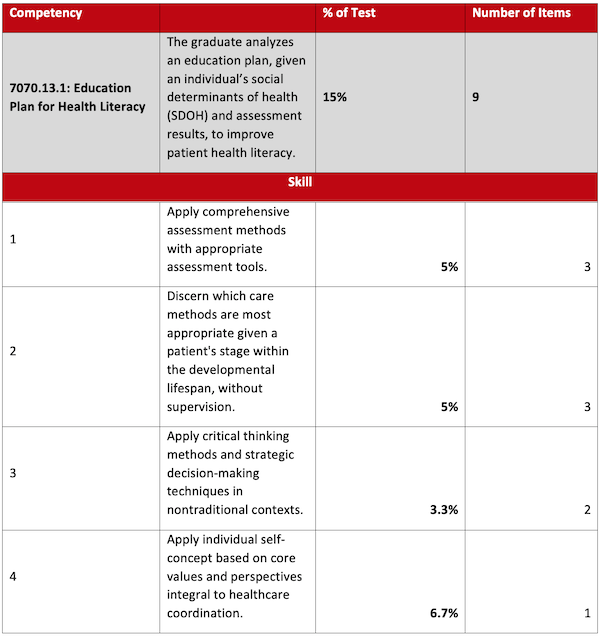

Next, test specifications are created. These specifications comprise a test description (who is the audience, what is the purpose, what inferences can be drawn from test results?) and a test blueprint. A test blueprint is exactly what it sounds like: It maps out what each test form must look like when being built. How many questions (items)? Which content areas do they come from? What type of items? Figure 1 presents a section of a test blueprint for illustration purposes. For each competency and skill on a Health Literacy for the Client and Family test, it delineates what proportion of the test that competency or skill represents and the associated number of items.

The test blueprint is then made available to students and instructors so that they have solid expectations about how they will be evaluated. Students should not have to guess what is considered important when preparing for an exam. We are transparent so that students know what is important and how to prepare for their assessment, thereby reducing test anxiety.

Figure 1. Section of Sample Test Blueprint

Nursing 102: Health Literacy for the Client and Family

Item types: multiple choice (MC), multiple response (Check all that apply), fill-in-the-blank

Time: 60 minutes

Number of items: 60
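To make the link between blueprint proportions and item counts concrete, here is a minimal sketch of how percentage weights could be converted into whole numbers of items for a 60-item form. The content-area names and weights are hypothetical placeholders, not the actual blueprint, and the largest-remainder rounding rule is an assumption chosen for illustration.

```python
def allocate_items(weights_pct, total_items):
    """Convert blueprint weights (whole percentages) into item counts.

    Uses largest-remainder rounding so the counts always sum to total_items.
    """
    if sum(weights_pct.values()) != 100:
        raise ValueError("blueprint weights must sum to 100 percent")

    counts, remainders = {}, {}
    for area, pct in weights_pct.items():
        # Integer arithmetic avoids floating-point rounding surprises.
        counts[area], remainders[area] = divmod(pct * total_items, 100)

    # Hand any leftover items to the areas with the largest remainders.
    leftover = total_items - sum(counts.values())
    for area in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        counts[area] += 1
    return counts


# Hypothetical content areas and weights, for illustration only.
blueprint = {
    "Health literacy concepts": 35,
    "Client education": 40,
    "Family communication": 25,
}
print(allocate_items(blueprint, 60))
```

Rounding each area independently can leave a form one item short or long, which is why the sketch distributes the leftover items explicitly.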

Writing Items

After the blueprint is created, items are written. While most people, particularly teachers and professors, think they can write good items, it isn’t as easy as it sounds and WGU holds high standards in this regard. There is some science behind how to do it appropriately. There are entire books written and courses taught on this subject—just because one is a good teacher does not mean one can write good items. Poor items might have no correct answer, multiple correct answers, be vague and confusing, or be so specific they lack relevance. Only a subset of written items (often around 50%) pass all the tests we put them through and meet all the requirements for use. Assessment developers work with subject matter experts to construct a set of items to meet the test purpose. Here, test forms are not being created so one doesn’t have to worry about which items can or cannot go together; we are just building a supply (a “bank” or “pool”) of items from which to later choose. Several documents aid in this work, such as the blueprint, item development guidelines and style guides.

Review, Review and Review

Items then go through a number of quality reviews. Subject matter experts review the items across multiple rounds to make sure the content is accurate, relevant and in concert with curricular materials. Assessment experts review the items for clarity, fairness and sensitivity. Copyeditors review the items for grammar, punctuation, spelling and style.

Test the Test

After an iterative process of review and revision, questions need to be tested with students or a similar group. This is called field testing, and its purpose is to further evaluate the quality of the items. The best way to collect this data is to embed these items among the scored items on a live test without the participants knowing which ones count and which do not. This alleviates any concerns around motivation and helps ensure the statistics gathered will generalize once the assessment is live. This is not always possible, particularly when a new course is launched and the target population does not yet exist. The closest we can come in these instances is to use practitioners who closely resemble what our students will look like one to two years after graduation. These practitioners (who are often alumni) attended the same or a similar program as our students and serve as a proxy for them. Field testing allows us to catch many problematic items before students suffer through them.

Analyze the Data: Psychometrics

A series of statistical analyses is conducted on these field test items to evaluate things like how difficult they are and how well they distinguish between competent and not-yet competent students. Importantly, these analyses also check whether any subgroups of students perform differently on an item than their overall ability would predict.

Items that do not meet pre-determined criteria are either discarded completely or revised and field tested again. Only successful items can be used on forms and “counted” for students moving forward.
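As an illustration of the kind of item-level statistics described above, the sketch below computes two classical indices from scored field test responses: item difficulty (the proportion of examinees answering correctly) and item discrimination (the correlation between an item score and the score on the remaining items). These are standard classical test theory measures used here as an assumption; the specific analyses and criteria WGU applies are not stated in this article, and the response data is invented.

```python
from statistics import mean, pstdev


def item_statistics(responses):
    """Compute difficulty and discrimination for each item.

    responses: one list per examinee of 0/1 item scores (1 = correct).
    """
    totals = [sum(row) for row in responses]
    stats = []
    for j in range(len(responses[0])):
        item = [row[j] for row in responses]
        # "Rest score": total score excluding the item being evaluated.
        rest = [t - x for t, x in zip(totals, item)]
        difficulty = mean(item)

        sd_item, sd_rest = pstdev(item), pstdev(rest)
        if sd_item == 0 or sd_rest == 0:
            # Correlation is undefined when either score never varies.
            discrimination = None
        else:
            m_item, m_rest = mean(item), mean(rest)
            cov = mean([(x - m_item) * (y - m_rest) for x, y in zip(item, rest)])
            discrimination = cov / (sd_item * sd_rest)

        stats.append({"item": j, "difficulty": difficulty,
                      "discrimination": discrimination})
    return stats


# Invented field test data: 4 examinees, 3 items.
field_test = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
for row in item_statistics(field_test):
    print(row)
```

An item with very low discrimination, or one that nearly everyone answers correctly or incorrectly, would be a natural candidate for revision or removal under whatever criteria are set in advance.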

Build Test Forms

Test forms are now built using the items from the field test. The test blueprint dictates the composition of the test forms, and statistical characteristics are used to ensure that all alternate forms of a test are of comparable difficulty. We build multiple forms of our assessments and administer them randomly, so a student will get one of these forms and will not be advantaged or disadvantaged by the form they receive. The forms are interchangeable in terms of content, format and statistical qualities. Having multiple forms aids in test security and allows for retake opportunities.
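The idea of comparable forms and random administration can be sketched in a few lines. The example below compares the mean item difficulty of alternate forms against a tolerance and then picks a form at random for a student. The form names, difficulty values and tolerance are hypothetical, and comparing mean difficulty alone is a simplifying assumption; a real comparison would consider more statistical properties.

```python
import random
from statistics import mean


def forms_comparable(forms, tolerance=0.02):
    """Check whether mean item difficulty differs by at most `tolerance`.

    forms: mapping of form name -> list of item difficulty values
    (proportion correct from field testing).
    """
    means = {name: mean(values) for name, values in forms.items()}
    spread = max(means.values()) - min(means.values())
    return spread <= tolerance, means


def assign_form(forms, rng=random):
    """Pick one form uniformly at random for a student."""
    return rng.choice(sorted(forms))


# Hypothetical forms built from field-tested items.
forms = {
    "Form A": [0.60, 0.70, 0.80],
    "Form B": [0.65, 0.70, 0.75],
}
ok, means = forms_comparable(forms)
print(ok, means)
print(assign_form(forms))
```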

Determine the Passing Score

After the actual forms are built, we perform something called standard setting. This is the process of determining the level of performance required to demonstrate competency. Whereas in traditional classroom exams, a score of 60 or 70 is considered passing for all tests, in our model standard setting is test specific. Standard setting is informed by expert judgment, data and policy considerations. We use an innovative two-stage process where we use the data from practitioners to set an initial cut score and then follow up after a number of students have taken the assessment to determine if any adjustment is warranted.

Test the Students

The next step is to administer the test to students. Our standardized administration procedures ensure fair, reliable, valid and secure assessments. Students typically test from a personal computer using online proctoring services that mimic test centers through use of a webcam. Consistent procedures, including additional security measures to guard against cheating, allow score comparisons regardless of test date, location and other factors.

Score the Test and Report Results

Assessments are then computer-scored using consistent and replicable processes, which help support the validity of the assessment. Here, students earn official results of either competent or not-yet competent and information is provided regarding their relative strengths and weaknesses.
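A minimal sketch of this scoring step follows, assuming a simple answer key, a raw cut score and per-area feedback reported as proportion correct. All names, the key and the cut score are hypothetical; the article does not describe the actual scoring rules or report format.

```python
def score_report(responses, key, areas, cut_score):
    """Score one examinee and summarize performance by content area.

    responses, key: parallel lists of selected and correct answers.
    areas: parallel list naming the content area of each item.
    cut_score: minimum raw score classified as competent (assumed rule).
    """
    correct = [int(r == k) for r, k in zip(responses, key)]
    raw_score = sum(correct)

    by_area = {}
    for is_correct, area in zip(correct, areas):
        hits, seen = by_area.get(area, (0, 0))
        by_area[area] = (hits + is_correct, seen + 1)

    return {
        "result": "competent" if raw_score >= cut_score else "not-yet competent",
        "raw_score": raw_score,
        "area_proportions": {a: h / n for a, (h, n) in by_area.items()},
    }


# Hypothetical four-item test with two content areas.
report = score_report(
    responses=["A", "B", "C", "D"],
    key=["A", "B", "D", "D"],
    areas=["Client education", "Client education",
           "Family communication", "Family communication"],
    cut_score=3,
)
print(report)
```

Because the same function and key are applied to every examinee, the result is replicable: identical responses always produce an identical report.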

Monitor Results

Finally, after each assessment is launched, it is subject to ongoing evaluation and scrutiny. Both test-level analyses and item-level analyses are routinely conducted to ensure that students are getting fair, reliable and valid assessments. Several key performance indicators are tracked for each assessment and monitored to ensure that they are staying within the appropriate range. Performance of an assessment can change over time for many reasons, such as course content changes, information becoming outdated (e.g., tax law changes), or security breaches (i.e., cheating). As part of this continuous monitoring, poorly performing items are replaced, and new items are field tested so that new forms can be built.
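The range check on key performance indicators could look something like the sketch below. The indicator names, values and acceptable ranges are hypothetical examples, since the article does not list which indicators are tracked or what their limits are.

```python
def out_of_range(kpis, limits):
    """Return the indicators whose values fall outside their allowed range.

    kpis: mapping of indicator name -> current value.
    limits: mapping of indicator name -> (low, high), inclusive.
    """
    flagged = {}
    for name, value in kpis.items():
        low, high = limits[name]
        if not (low <= value <= high):
            flagged[name] = value
    return flagged


# Hypothetical indicators and ranges, for illustration only.
limits = {"pass_rate": (0.60, 0.90), "reliability": (0.80, 1.00)}
current = {"pass_rate": 0.95, "reliability": 0.86}
print(out_of_range(current, limits))
```

A flagged indicator, such as a pass rate that drifts upward over time, would prompt a closer look at possible causes like outdated content or exposed items.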

It takes a lot of time, effort, and expertise to build, administer and monitor assessments. It’s not something that we take lightly. WGU is a student-centered university, and we go to great lengths to ensure that the credentials our students earn are high-quality, industry-validated and credible in the marketplace. WGU’s sole focus is on student learning and success in the workforce, so the rigor of our assessments is directly linked to the quality and value of the credentials our graduates earn.

Author Perspective: Administrator